Deep Dive into CuresDev Tokenization Technology

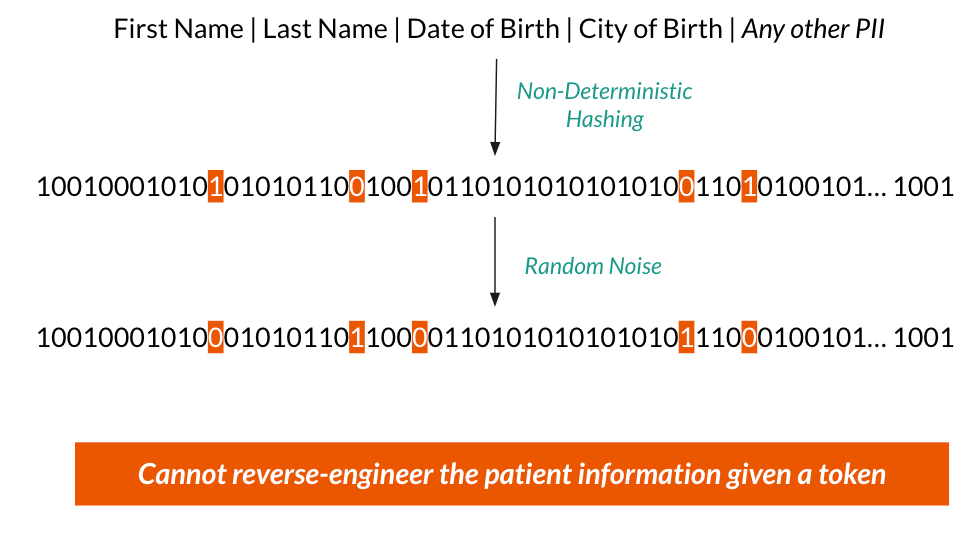

Convert patient information to a string of zeroes and ones, with random noise added for additional privacy

CuresDev is building a data sharing network specifically for rare diseases. The network allows sharing of data at the patient-level to form a longitudinal view of the patient journey. CuresDev enables sharing in a privacy-sensitive manner using a novel technology called Tokenization. In this post, we will deep dive into our Tokenization technology using a demo application.

Why do we need tokens?

Suppose you want to merge a natural history database and a clinical trials database to understand the patient journey before and after treatment. The goal is to understand the patient journey, not necessarily to identify who the patient is. Without exchanging patient-identifying information such as name and date of birth, it is not possible to link records of the same patient across the two databases. In a previous post, I provided a detailed overview of possible solutions to this problem. Many of these solutions suffer from drawbacks such as poor privacy and limited geographic availability. That post covers the drawbacks in detail.

Tokenization Preserves Privacy

Tokenization is the process of converting patient-identifying information into an anonymous representation of the data called tokens. The tokens contain just enough information to tell whether two tokens belong to the same patient or not. In the example above, the two databases would generate and share tokens in order to link the records of the same patient together.

CuresDev has developed a novel tokenization technology that first converts patient information into a 1,000-bit string of zeroes and ones. We use a probabilistic hashing technique called Bloom filters to generate the string. To preserve privacy, we then randomly mutate about 5% to 15% of the bits. As a result, every time the tokenization process runs, it produces a different token for the same patient. This ensures the tokens cannot be reversed back to the original patient information.
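To make the two steps concrete, here is a minimal Python sketch of the idea described above. It is an illustration under stated assumptions, not the CuresDev implementation: the function names, the use of character bigrams, the 10 SHA-256-based hash functions, and drawing the flip rate uniformly between 5% and 15% are all choices made for this example. Only the 1,000-bit length and the 5% to 15% mutation range come from the post.

```python
import hashlib
import random

TOKEN_BITS = 1000   # token length stated in the post
NUM_HASHES = 10     # assumption: hash functions applied to each n-gram
NGRAM_SIZE = 2      # assumption: overlapping character bigrams


def ngrams(text, n=NGRAM_SIZE):
    """Split normalized, padded text into overlapping character n-grams."""
    padded = f"_{text.strip().lower()}_"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def bloom_encode(text):
    """Deterministic step: set NUM_HASHES bit positions for every n-gram."""
    bits = [0] * TOKEN_BITS
    for gram in ngrams(text):
        for seed in range(NUM_HASHES):
            digest = hashlib.sha256(f"{seed}:{gram}".encode("utf-8")).digest()
            bits[int.from_bytes(digest[:8], "big") % TOKEN_BITS] = 1
    return bits


def add_noise(bits, rng):
    """Random step: flip each bit with a rate drawn between 5% and 15%."""
    flip_rate = rng.uniform(0.05, 0.15)
    return [bit ^ 1 if rng.random() < flip_rate else bit for bit in bits]


def tokenize(text, rng=None):
    """Return a TOKEN_BITS-long string of zeroes and ones for the input text."""
    if rng is None:
        rng = random.SystemRandom()  # OS entropy, so runs are not repeatable
    return "".join(str(bit) for bit in add_noise(bloom_encode(text), rng))


first = tokenize("Jane Doe")
second = tokenize("Jane Doe")
print(len(first), first == second)
```

Both tokens are 1,000 characters long, and the comparison is almost certainly `False` because each call draws fresh noise. In this sketch the hashing itself is deterministic; the run-to-run difference comes entirely from the bit flips.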

CuresDev Tokenization technology is unique in many ways:

Tolerates misspellings

Provides strong privacy guarantees using Differential Privacy

Fully Open Source, allowing anyone to verify the privacy guarantees of our technology - https://github.com/curesdev

Works fully offline and never transmits personal information to CuresDev servers

Works globally and complies with global privacy laws

Prevents Re-Identification attacks
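Because every run adds fresh noise, two tokens for the same patient will not match bit for bit, so linking presumably relies on a similarity score rather than exact equality. The sketch below illustrates how that could also tolerate misspellings. It is not the CuresDev matching algorithm: the `encode` and `dice` helpers, the bigram splitting, the 10 hash functions, the fixed 10% flip rate, the Dice coefficient as the similarity measure, and the sample records are all assumptions made for this example.

```python
import hashlib
import random

TOKEN_BITS = 1000  # token length stated in the post


def encode(record, rng, flip_rate=0.10, num_hashes=10):
    """Hypothetical encoder: bigram Bloom filter followed by random bit flips."""
    padded = f"_{record.strip().lower()}_"
    bits = [0] * TOKEN_BITS
    for i in range(len(padded) - 1):
        for seed in range(num_hashes):
            data = f"{seed}:{padded[i:i + 2]}".encode("utf-8")
            position = int.from_bytes(hashlib.sha256(data).digest()[:8], "big")
            bits[position % TOKEN_BITS] = 1
    return [bit ^ 1 if rng.random() < flip_rate else bit for bit in bits]


def dice(a, b):
    """Dice coefficient: twice the shared set bits over the total set bits."""
    shared = sum(x & y for x, y in zip(a, b))
    total = sum(a) + sum(b)
    return 2 * shared / total if total else 0.0


rng = random.Random(7)  # fixed seed only so the demo is repeatable
original = encode("Jonathan Smith 1984-03-12", rng)
misspelled = encode("Jonathon Smith 1984-03-12", rng)
unrelated = encode("Maria Garcia 1990-07-01", rng)

print(f"misspelled: {dice(original, misspelled):.2f}")
print(f"unrelated:  {dice(original, unrelated):.2f}")
```

Under these assumptions the misspelled record should score well above the unrelated one, since a single wrong letter changes only a couple of bigrams. A real deployment would need to choose a match threshold suited to its own fields and noise level.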

Demo of CuresDev Tokenization

The following demo dives deep into how the tokenization algorithm produces a token from a simple string, such as a name.